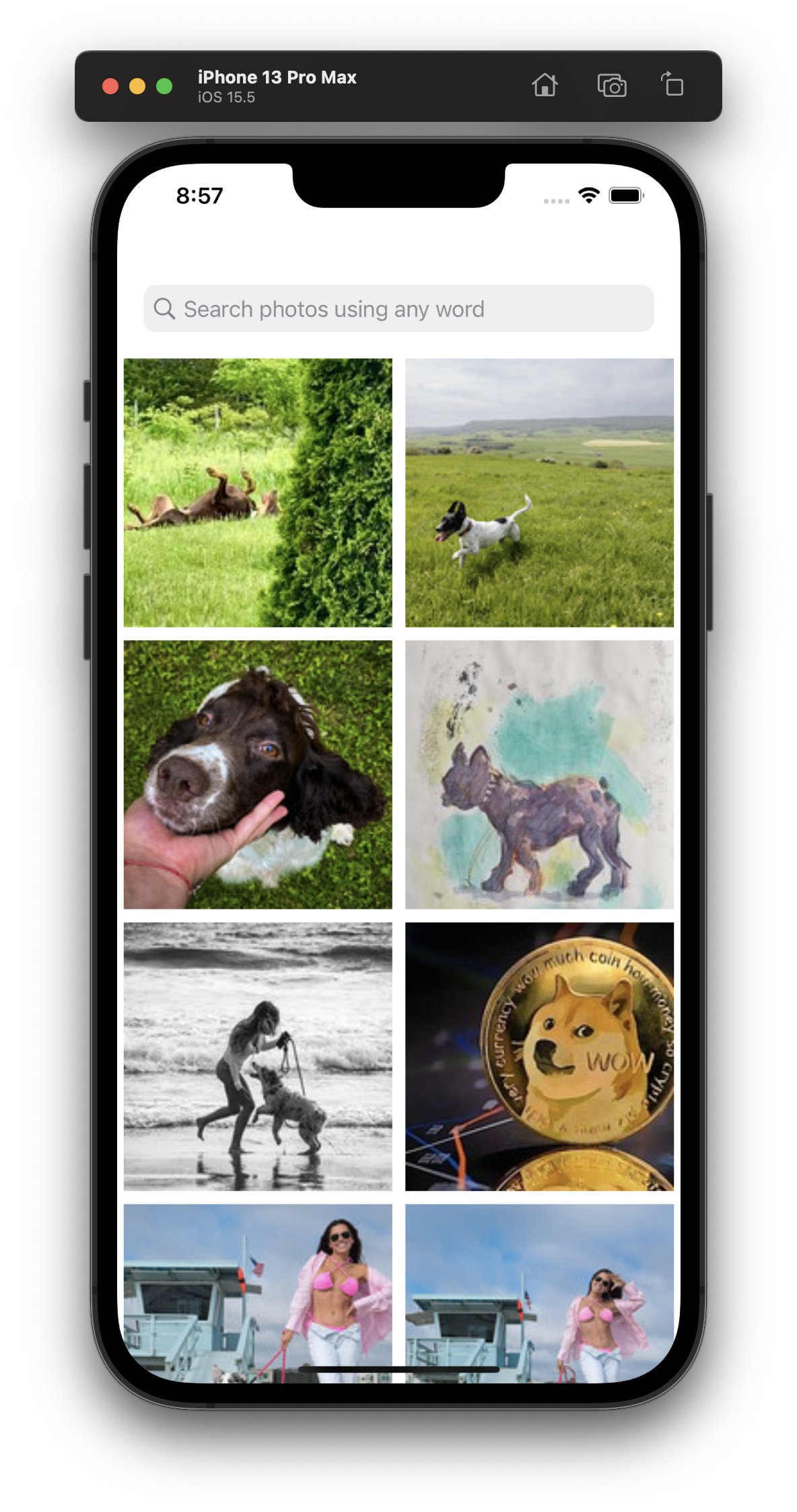

A Mobile Text-to-Image Search Powered by AI

A minimal iOS demo of semantic, multimodal text-to-image search using pretrained vision-language models.

Features

- Text-to-image retrieval via semantic similarity search.

- Support for multiple vector indexing strategies (linear scan and KMeans are currently implemented).
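The two retrieval strategies listed above can be sketched in Swift as follows. This is a minimal illustration under stated assumptions, not the project's actual code: it assumes the image and query embeddings are already available as `[Float]` arrays produced by the text and image encoders, scores them with cosine similarity, and uses hypothetical names (`cosineSimilarity`, `linearSearch`, `nearestCentroid`, `KMeansIndex`). The linear scan scores every image; the KMeans variant clusters the image embeddings once, then scans only the cluster whose centroid best matches the query.

```swift
// Hypothetical helper: cosine similarity between two equal-length embeddings.
func cosineSimilarity(_ a: [Float], _ b: [Float]) -> Float {
    precondition(a.count == b.count, "embedding dimensions must match")
    var dot: Float = 0, normA: Float = 0, normB: Float = 0
    for i in 0..<a.count {
        dot += a[i] * b[i]
        normA += a[i] * a[i]
        normB += b[i] * b[i]
    }
    let denom = normA.squareRoot() * normB.squareRoot()
    return denom == 0 ? 0 : dot / denom
}

// Linear scan: score every image embedding against the query, return top-k indices.
func linearSearch(query: [Float], images: [[Float]], topK: Int) -> [Int] {
    let scored = images.indices.map { ($0, cosineSimilarity(query, images[$0])) }
    return scored.sorted { $0.1 > $1.1 }.prefix(topK).map { $0.0 }
}

// Index of the centroid most similar to `v` (cosine similarity).
func nearestCentroid(_ v: [Float], _ centroids: [[Float]]) -> Int {
    var best = 0
    var bestScore = -Float.infinity
    for (c, centroid) in centroids.enumerated() {
        let s = cosineSimilarity(v, centroid)
        if s > bestScore { bestScore = s; best = c }
    }
    return best
}

// Sketch of a KMeans (cluster-then-scan) index over image embeddings.
struct KMeansIndex {
    var centroids: [[Float]]
    var members: [[Int]]  // members[c] = indices of images assigned to cluster c

    init(vectors: [[Float]], k: Int, iterations: Int = 10) {
        // Assumption: deterministic init from the first k vectors (k-means++ is common in practice).
        var centroids = Array(vectors.prefix(k))
        for _ in 0..<iterations {
            // Assignment step: each vector goes to its most similar centroid.
            let assignment = vectors.map { nearestCentroid($0, centroids) }
            // Update step: each centroid becomes the mean of its assigned vectors.
            for c in 0..<centroids.count {
                let group = vectors.indices.filter { assignment[$0] == c }
                guard !group.isEmpty else { continue }
                var mean = [Float](repeating: 0, count: vectors[0].count)
                for i in group { for d in 0..<mean.count { mean[d] += vectors[i][d] } }
                centroids[c] = mean.map { $0 / Float(group.count) }
            }
        }
        // Final assignment against the converged centroids.
        var members = [[Int]](repeating: [], count: centroids.count)
        for (i, v) in vectors.enumerated() { members[nearestCentroid(v, centroids)].append(i) }
        self.centroids = centroids
        self.members = members
    }

    // Scan only the members of the cluster nearest to the query.
    func search(query: [Float], images: [[Float]], topK: Int) -> [Int] {
        let candidates = members[nearestCentroid(query, centroids)]
        let scored = candidates.map { ($0, cosineSimilarity(query, images[$0])) }
        return scored.sorted { $0.1 > $1.1 }.prefix(topK).map { $0.0 }
    }
}
```

The KMeans index trades recall for speed: a relevant image that was assigned to a different cluster than the query's nearest centroid is never scored. Probing the few nearest clusters instead of one is a common way to recover some of that recall.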

Screenshot

Install

- Download the two TorchScript model files (text encoder and image encoder) into the `models` folder and add them to the Xcode project.

- Run `pod install`, then open the generated `.xcworkspace` file in Xcode.

pod install

Todo

- Basic features

  - Access to a specified album or the entire photo library

  - Asynchronous model loading and vector computation

- Indexing strategies

  - Linear indexing (persisted to file via the built-in `Data` type)

  - KMeans indexing (persisted to file via `NSMutableDictionary`)

  - Ball-tree indexing

  - Locality-sensitive hashing (LSH) indexing

- Choice of semantic representation models

  - OpenAI's CLIP model

  - Integration of other multimodal retrieval models

- Efficiency

  - Reducing the memory consumption of models
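Persisting the linear index through Foundation's `Data` type might look like the sketch below. The byte layout is an assumption chosen for illustration, not the project's actual file format: a little-endian `UInt32` vector count, a `UInt32` dimension, then every `Float32` value stored by its bit pattern. The function names `encodeEmbeddings` and `decodeEmbeddings` are hypothetical.

```swift
import Foundation

// Assumed layout (hypothetical, not the project's format):
// [count: UInt32][dimension: UInt32][count * dimension Float32 bit patterns], little-endian.
func appendUInt32(_ value: UInt32, to data: inout Data) {
    for shift in stride(from: 0, to: 32, by: 8) {
        data.append(UInt8(truncatingIfNeeded: value >> UInt32(shift)))
    }
}

func readUInt32(_ bytes: [UInt8], at offset: Int) -> UInt32 {
    var value: UInt32 = 0
    for i in 0..<4 { value |= UInt32(bytes[offset + i]) << UInt32(8 * i) }
    return value
}

// Serialize a flat (linear-scan) index of image embeddings.
func encodeEmbeddings(_ vectors: [[Float]]) -> Data {
    let dim = vectors.first?.count ?? 0
    var data = Data()
    appendUInt32(UInt32(vectors.count), to: &data)
    appendUInt32(UInt32(dim), to: &data)
    for vector in vectors {
        precondition(vector.count == dim, "all embeddings must share one dimension")
        for value in vector { appendUInt32(value.bitPattern, to: &data) }
    }
    return data
}

// Rebuild the embeddings; returns nil if the payload size does not match the header.
func decodeEmbeddings(_ data: Data) -> [[Float]]? {
    let bytes = [UInt8](data)
    guard bytes.count >= 8 else { return nil }
    let count = Int(readUInt32(bytes, at: 0))
    let dim = Int(readUInt32(bytes, at: 4))
    let (cells, overflow) = count.multipliedReportingOverflow(by: dim)
    guard !overflow, cells <= (bytes.count - 8) / 4, bytes.count == 8 + cells * 4 else { return nil }
    var vectors: [[Float]] = []
    vectors.reserveCapacity(count)
    var offset = 8
    for _ in 0..<count {
        var vector = [Float](repeating: 0, count: dim)
        for d in 0..<dim {
            vector[d] = Float(bitPattern: readUInt32(bytes, at: offset))
            offset += 4
        }
        vectors.append(vector)
    }
    return vectors
}
```

On device, the resulting `Data` can be written with `try data.write(to: url)` and read back with `try Data(contentsOf: url)`. Fixing the byte order explicitly keeps the file readable regardless of the host's endianness.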