A Novel Implementation of LiDAR Mesh Classification and Image Classifiers in Assistive Technology for the Visually Impaired

Background Information

An estimated 253 million people worldwide, including 20.6 million Americans, are visually impaired. Research indicates that 42% of visually impaired people have trouble navigating around everyday objects in their environments.

Goal

To alleviate the stressors experienced by the visually impaired when traversing unfamiliar environments by leveraging LiDAR mesh classifications and supplemental image classifiers to non-intrusively relay sufficient descriptions of the surroundings to the user.

How it Works

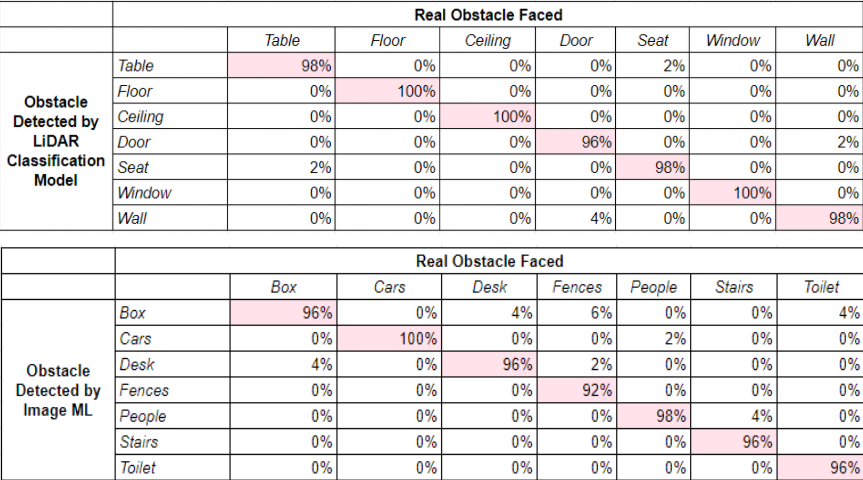

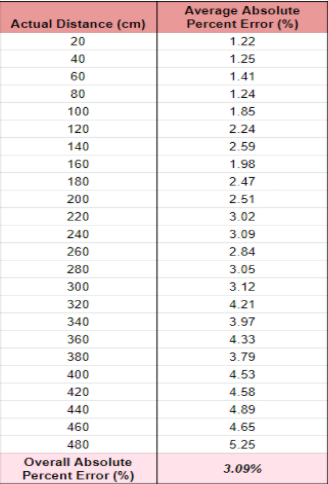

- We used the LiDAR sensor on the iPhone 12 Pro to classify relatively simple geometric objects from a depth map. The LiDAR sensor doubled as a distance-measuring device, capable of measuring distances to objects up to 5 meters away.

- For more geometrically complex objects, we used Apple's Core ML framework for image classification.

- Finally, we used Apple's AVFoundation framework to non-intrusively relay descriptions of the surroundings to the user.
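The distance measurement described in the first bullet can be sketched roughly as follows. On device, ARKit exposes LiDAR depth through `ARFrame.sceneDepth` when the session's `frameSemantics` includes `.sceneDepth`; the resulting `ARDepthData.depthMap` is a pixel buffer of 32-bit floats in meters. This is a minimal sketch, not the project's code: it assumes that buffer has already been copied into a row-major `[Float]` array, and the helper name `centerDistance`, the 5-pixel window, and the use of a median are hypothetical choices.

```swift
/// Hypothetical helper: estimates the distance (meters) to whatever sits in
/// the center of the camera view, given a row-major LiDAR depth map that has
/// already been copied out of ARKit's pixel buffer into a [Float] array.
/// Returns nil when no valid reading lies within `maxRange`.
func centerDistance(depth: [Float], width: Int, height: Int,
                    window: Int = 5, maxRange: Float = 5.0) -> Float? {
    guard width > 0, height > 0, window > 0,
          depth.count == width * height else { return nil }
    let cx = width / 2, cy = height / 2, half = window / 2
    var samples: [Float] = []
    // Collect readings from a small square window around the center pixel,
    // clipped to the image bounds.
    for y in max(0, cy - half)...min(height - 1, cy + half) {
        for x in max(0, cx - half)...min(width - 1, cx + half) {
            let d = depth[y * width + x]
            // Skip missing (NaN or zero) and out-of-range readings.
            if d.isFinite && d > 0 && d <= maxRange {
                samples.append(d)
            }
        }
    }
    guard !samples.isEmpty else { return nil }
    samples.sort()
    // The (upper) median resists stray pixels at object edges better than a mean.
    return samples[samples.count / 2]
}
```

Taking a median over a small window rather than a single pixel is one simple way to tolerate noisy or missing depth values; the 5-meter default matches the range stated above.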

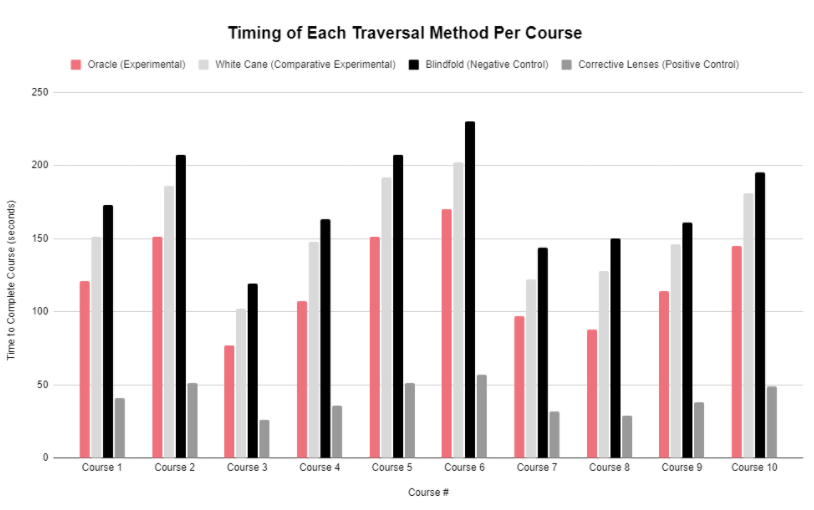
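The steps above do not spell out how the mesh classification, the image classifier, and the speech output are combined, so the following Swift sketch shows one plausible arrangement under stated assumptions. `MeshLabel` is a stand-in that mirrors the cases and raw values of ARKit's `ARMeshClassification` so the code compiles without ARKit. `Observation`, `describe`, `Announcer`, and the 0.6 confidence threshold are hypothetical. The sketch prefers the mesh label when it is not `.none`, falls back to the image classifier's top label, drops anything beyond the 5-meter LiDAR range, and suppresses repeated phrases to keep feedback unobtrusive.

```swift
// Stand-in mirroring ARKit's ARMeshClassification cases (same raw values),
// so this sketch compiles without ARKit.
enum MeshLabel: Int {
    case none = 0, wall, floor, ceiling, table, seat, window, door
}

/// One observation of the scene in front of the user (hypothetical type).
struct Observation {
    let meshLabel: MeshLabel     // from LiDAR mesh classification
    let imageLabel: String?      // top label from an image classifier, if any
    let imageConfidence: Float   // classifier confidence, 0...1
    let distance: Float          // meters, from LiDAR depth
}

/// Builds a short phrase to speak, preferring the mesh label and falling back
/// to the image classifier. Returns nil when nothing should be announced.
func describe(_ o: Observation,
              minConfidence: Float = 0.6,   // hypothetical threshold
              maxRange: Float = 5.0) -> String? {
    guard o.distance > 0, o.distance <= maxRange else { return nil }
    let name: String
    if o.meshLabel != .none {
        name = "\(o.meshLabel)"          // e.g. "table"
    } else if let label = o.imageLabel, o.imageConfidence >= minConfidence {
        name = label
    } else {
        return nil
    }
    // Round to the nearest half meter so announcements stay short.
    let rounded = (o.distance * 2).rounded() / 2
    return "\(name), \(rounded) meters"
}

/// Hypothetical gate that skips a phrase identical to the last one spoken.
struct Announcer {
    var lastPhrase: String? = nil

    mutating func next(_ o: Observation) -> String? {
        guard let phrase = describe(o), phrase != lastPhrase else { return nil }
        lastPhrase = phrase
        return phrase
    }
}
```

On device, `imageLabel` and `imageConfidence` could come from a Vision `VNClassificationObservation` (its `identifier` and `confidence`) produced by a Core ML model, and a non-nil phrase could be passed to AVFoundation's `AVSpeechSynthesizer` via `AVSpeechUtterance(string:)`.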

Results

Publication

Partners

Honors & Awards

🏆

- 1st Place in the Georgia Junior Science & Humanities Symposium (as presenter)

- Honorable Mention in the National Junior Science & Humanities Symposium (as presenter)

- Georgia Science and Engineering Fair: Best in Category, Systems Software

- Georgia Science and Engineering Fair: Mu Alpha Theta Award

- 1st Place in the GSMST Science & Engineering Fair

- 1st Place in the Gwinnett County Science and Engineering Fair