Shallow and Deep Convolutional Networks for Saliency Prediction

|

Paper accepted at 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) |

|---|

|

|

|

|

|

|---|---|---|---|---|

| Junting Pan (*) | Kevin McGuinness (*) | Elisa Sayrol | Noel O'Connor | Xavier Giro-i-Nieto |

(*) Equal contribution

A joint collaboration between:

|

|

|

|

|

|---|---|---|---|---|

| Insight Centre for Data Analytics | Dublin City University (DCU) | Universitat Politecnica de Catalunya (UPC) | UPC ETSETB TelecomBCN | UPC Image Processing Group |

Abstract

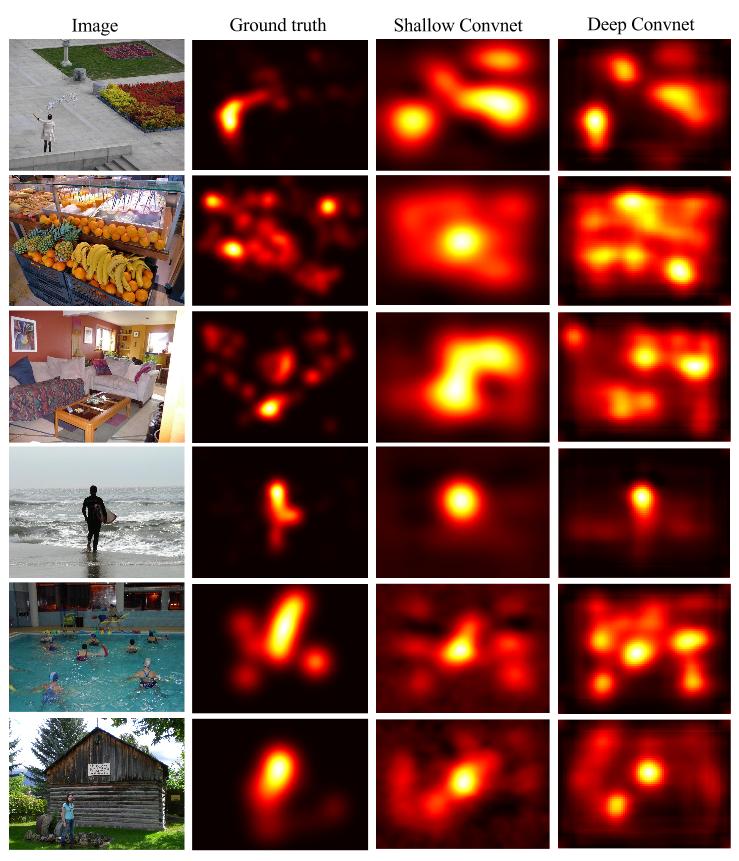

The prediction of salient areas in images has been traditionally addressed with hand-crafted features based on neuroscience principles. This paper, however, addresses the problem with a completely data-driven approach by training a convolutional neural network (convnet). The learning process is formulated as a minimization of a loss function that measures the Euclidean distance of the predicted saliency map with the provided ground truth. The recent publication of large datasets of saliency prediction has provided enough data to train end-to-end architectures that are both fast and accurate. Two designs are proposed: a shallow convnet trained from scratch, and a another deeper solution whose first three layers are adapted from another network trained for classification. To the authors knowledge, these are the first end-to-end CNNs trained and tested for the purpose of saliency prediction

Publication

Our paper is open published thanks to the Computer Science Foundation. An arXiv pre-print is also available.

Please cite with the following Bibtex code:

@InProceedings{Pan_2016_CVPR,

author = {Pan, Junting and Sayrol, Elisa and Giro-i-Nieto, Xavier and McGuinness, Kevin and O'Connor, Noel E.},

title = {Shallow and Deep Convolutional Networks for Saliency Prediction},

booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2016}

}

You may also want to refer to our publication with the more human-friendly Chicago style:

Junting Pan, Kevin McGuinness, Elisa Sayrol, Noel E. O'Connor, and Xavier Giro-i-Nieto. "Shallow and Deep Convolutional Networks for Saliency Prediction." In Proceedings of the IEEE International Conference on Computer Vision (CVPR). 2016.

Models

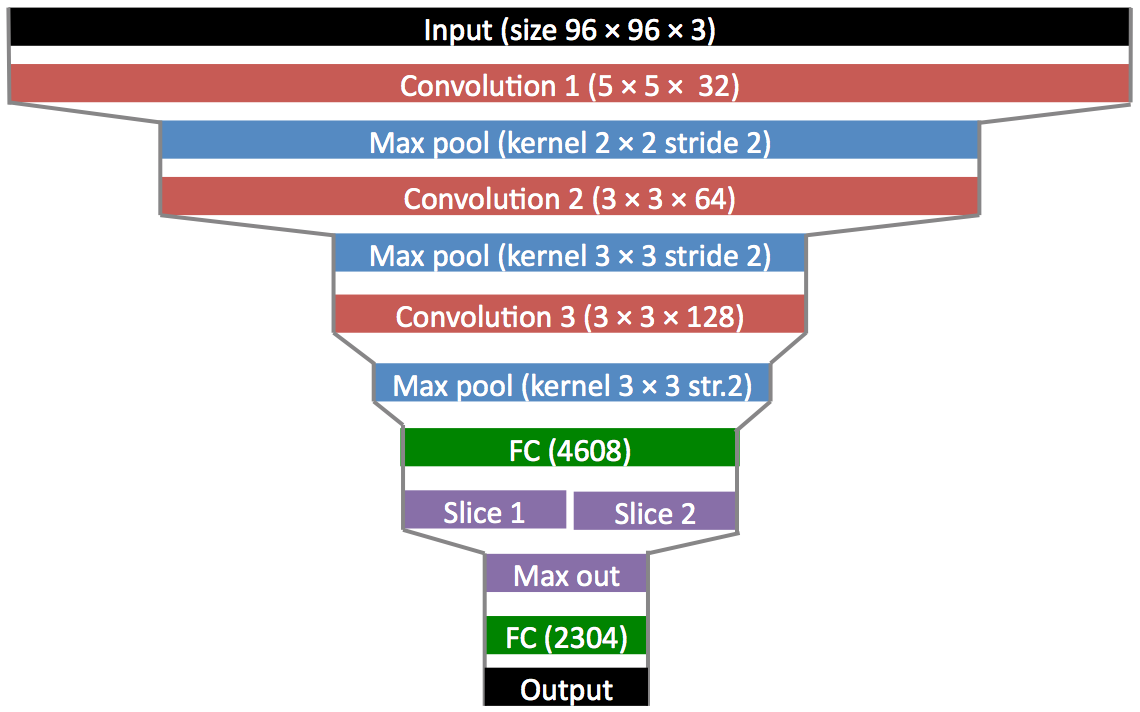

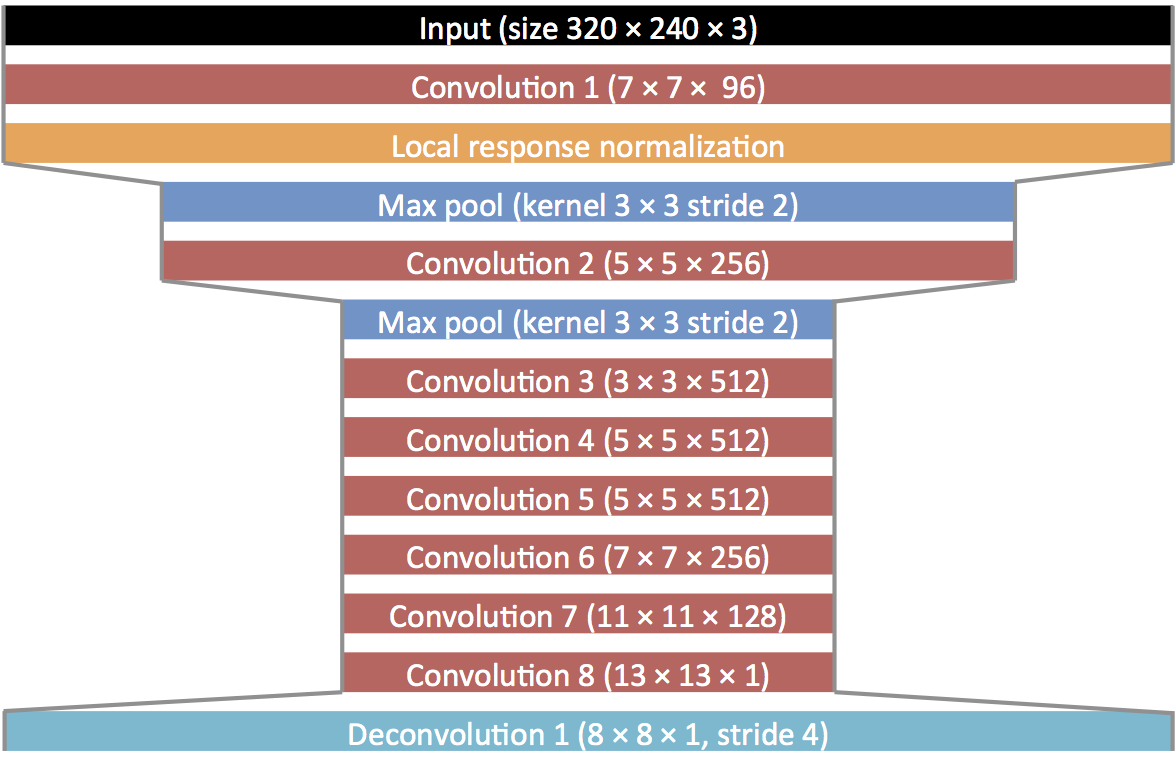

The two convnets presented in our work can be downloaded from the links provided below each respective figure:

| Shallow ConvNet (aka JuntingNet) | Deep ConvNet (aka SalNet) |

|---|---|

|

|

| [Lasagne Model (2.5 GB)] | [Caffe Model (99 MB)] [Caffe Prototxt] |

Our previous winning shallow models for the LSUN Saliency Prediction Challenge 2015 are described in this preprint and available from this other site. That work was also part of Junting Pan's bachelor thesis at UPC TelecomBCN school in June 2015, which report, slides and video are available here.

Visual Results

Datasets

Training

As explained in our paper, our networks were trained on the training and validation data provided by SALICON.

Test

Three different dataset were used for test:

A collection of links to the SALICON and iSUN datasets is available from the LSUN Challenge site.

Software frameworks

Our paper presents two different convolutional neural networks trained with different frameworks. For this reason, different instructions and source code folders are provided.

Shallow Network on Lasagne

The shallow network is implemented in Lasagne, which at its time is developed over Theano. To install required version of Lasagne and all the remaining dependencies, you should run this pip command.

pip install -r https://github.com/imatge-upc/saliency-2016-cvpr/blob/master/shallow/requirements.txt

This requirements file was provided by Daniel Nouri.

Deep Network on Caffe

The deep network was developed over Caffe by Berkeley Vision and Learning Center (BVLC). You will need to follow these instructions to install Caffe.

Posterior work

If you were interested in this work, you may want to also check our posterior work, SalGAN, which offers a better performance.

Acknowledgements

We would like to especially thank Albert Gil Moreno and Josep Pujal from our technical support team at the Image Processing Group at the UPC.

|

|

|---|---|

| Albert Gil | Josep Pujal |

| We gratefully acknowledge the support of NVIDIA Corporation with the donation of the GeoForce GTX Titan Z and Titan X used in this work. |  |

| The Image ProcessingGroup at the UPC is a SGR14 Consolidated Research Group recognized and sponsored by the Catalan Government (Generalitat de Catalunya) through its AGAUR office. |  |

| This work has been developed in the framework of the project BigGraph TEC2013-43935-R, funded by the Spanish Ministerio de Economía y Competitividad and the European Regional Development Fund (ERDF). |  |

| This publication has emanated from research conducted with the financial support of Science Foundation Ireland (SFI) under grant number SFI/12/RC/2289. |  |

Contact

If you have any general doubt about our work or code which may be of interest for other researchers, please use the public issues section on this github repo. Alternatively, drop us an e-mail at mailto:[email protected].