Introduction

LRUCache is an open-source replacement for NSCache that behaves in a predictable, debuggable way. LRUCache is an LRU (Least-Recently-Used) cache, meaning that when it exceeds its limits, entries are discarded oldest-first, based on the last time each one was accessed. On iOS and tvOS, LRUCache will also empty itself automatically in the event of a memory warning.
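To make the eviction order concrete, the following sketch simulates least-recently-used eviction for a sequence of key accesses with a fixed capacity. `simulateLRU` is a hypothetical helper, not part of LRUCache, and it uses a plain array with O(n) updates purely for readability.

```swift
// Simulates LRU eviction for illustration only (hypothetical helper, not LRUCache's API).
// Keys are kept in an array ordered from least to most recently used.
func simulateLRU(accesses: [String], capacity: Int) -> (evicted: [String], remaining: [String]) {
    precondition(capacity > 0, "capacity must be positive")
    var order: [String] = []   // least recently used first
    var evicted: [String] = []
    for key in accesses {
        if let index = order.firstIndex(of: key) {
            // Already cached: move the key to the most-recently-used end.
            order.remove(at: index)
        } else if order.count == capacity {
            // Cache full: discard the least recently used key.
            evicted.append(order.removeFirst())
        }
        order.append(key)
    }
    return (evicted: evicted, remaining: order)
}

let result = simulateLRU(accesses: ["a", "b", "c", "a", "d"], capacity: 3)
print(result.evicted)   // ["b"]
print(result.remaining) // ["c", "a", "d"]
```

Accessing `a` a second time moves it to the most-recently-used position, so `b` (not `a`) is the first key discarded when `d` arrives.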

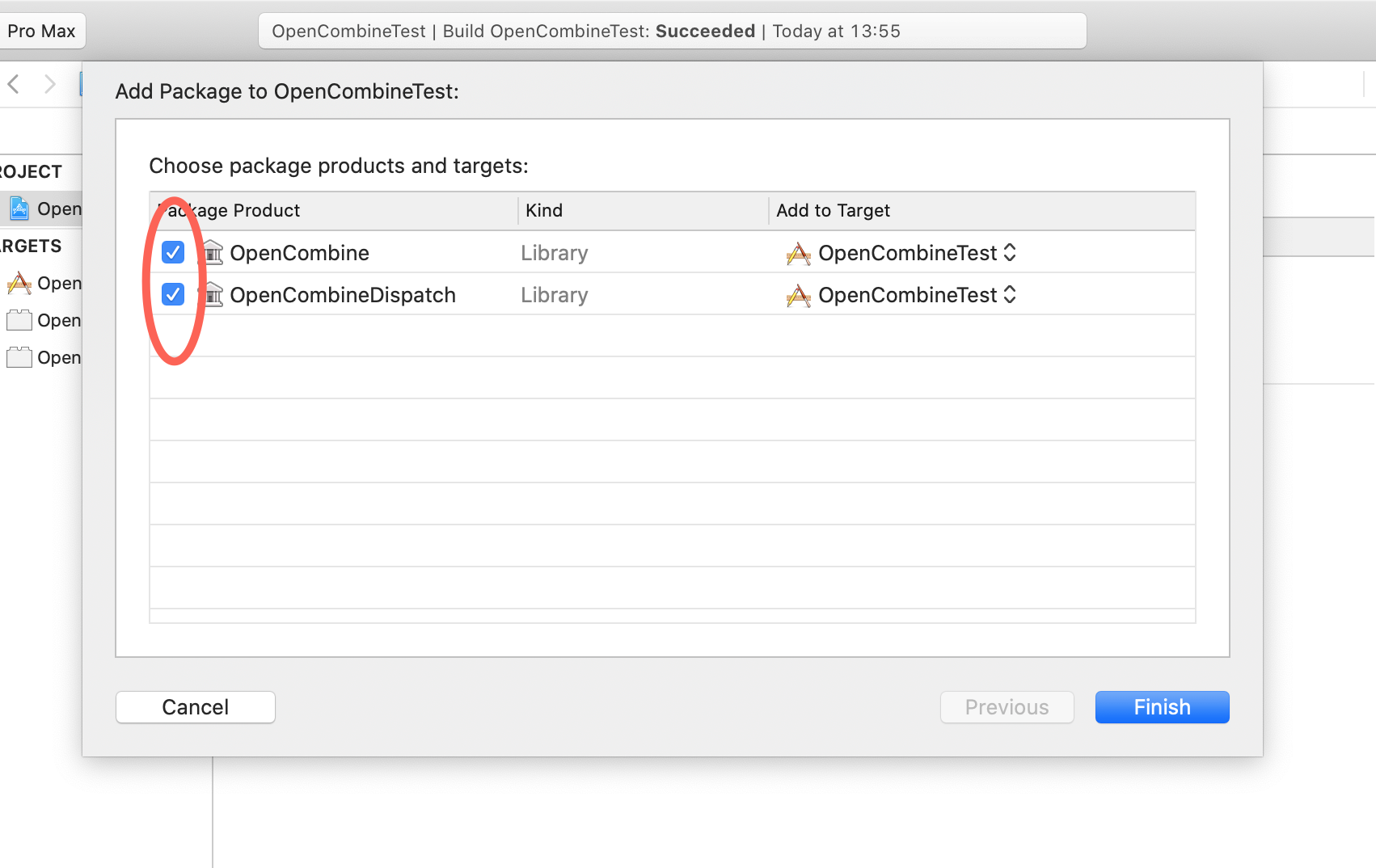

Installation

LRUCache is packaged as a dynamic framework that you can import into your Xcode project. You can install it manually or by using Swift Package Manager.

Note: LRUCache requires Xcode 10+ to build, and runs on iOS 10+ or macOS 10.12+.

To install using Swift Package Manager, add this to the dependencies: section in your Package.swift file:

.package(url: "https://github.com/nicklockwood/LRUCache.git", .upToNextMinor(from: "1.0.0")),
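For reference, a complete Package.swift using this dependency might look like the sketch below. The package and target name `MyApp`, the tools version, and the assumption that the library product is named `LRUCache` are illustrative choices, not requirements stated by LRUCache itself; the platform versions follow the minimums listed above.

```swift
// swift-tools-version:5.3
// Sketch of a manifest. "MyApp" is a hypothetical package/target name, and the
// product name "LRUCache" is assumed to match the dependency's package name.
import PackageDescription

let package = Package(
    name: "MyApp",
    platforms: [.iOS(.v10), .macOS(.v10_12)],
    dependencies: [
        .package(url: "https://github.com/nicklockwood/LRUCache.git", .upToNextMinor(from: "1.0.0")),
    ],
    targets: [
        .target(
            name: "MyApp",
            dependencies: [.product(name: "LRUCache", package: "LRUCache")]
        ),
    ]
)
```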

Usage

You can create an instance of LRUCache as follows:

let cache = LRUCache<String, Int>()

This would create a cache of unlimited size, containing Int values keyed by String. To add a value to the cache, use:

cache.setValue(99, forKey: "foo")

To fetch a cached value, use:

let value = cache.value(forKey: "foo") // Returns nil if value not found

You can limit the cache size either by count or by cost. This can be done at initialization time:

let cache = LRUCache<URL, Date>(totalCostLimit: 1000, countLimit: 100)

Or after the cache has been created:

cache.countLimit = 100 // Limit the cache to 100 elements

cache.totalCostLimit = 1000 // Limit the cache to 1000 total cost

The cost is an arbitrary measure of size, defined by your application. For a file or data cache, you might base cost on the size in bytes, but you can use any metric you like. To specify the cost of a stored value, use the optional cost parameter:

cache.setValue(data, forKey: "foo", cost: data.count)
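The interaction between the count limit and the cost limit can be sketched as follows. `BoundedCache` is a hypothetical, simplified type that mirrors the method names above; it tracks recency with an array for brevity and is not LRUCache's actual implementation. In this sketch, entries are evicted least-recently-used first while either limit is exceeded, and changing a limit after creation trims the cache immediately.

```swift
// Hypothetical cache bounded by both a count limit and a total cost limit.
// Recency is tracked with an O(n) array to keep the example short.
final class BoundedCache<Key: Hashable, Value> {
    private var storage: [Key: (value: Value, cost: Int)] = [:]
    private var order: [Key] = []   // least recently used first
    private(set) var totalCost = 0

    // In this sketch, changing a limit after creation trims the cache immediately.
    var countLimit: Int { didSet { trim() } }
    var totalCostLimit: Int { didSet { trim() } }

    init(totalCostLimit: Int = .max, countLimit: Int = .max) {
        self.totalCostLimit = totalCostLimit
        self.countLimit = countLimit
    }

    var count: Int { storage.count }

    func value(forKey key: Key) -> Value? {
        guard let entry = storage[key] else { return nil }
        touch(key)
        return entry.value
    }

    func setValue(_ value: Value, forKey key: Key, cost: Int = 0) {
        if let old = storage[key] {
            totalCost -= old.cost   // replacing an entry releases its old cost
        }
        storage[key] = (value: value, cost: cost)
        totalCost += cost
        touch(key)
        trim()
    }

    // Moves `key` to the most-recently-used end of the order.
    private func touch(_ key: Key) {
        if let index = order.firstIndex(of: key) { order.remove(at: index) }
        order.append(key)
    }

    // Evicts least recently used entries while either limit is exceeded.
    private func trim() {
        while !order.isEmpty && (storage.count > countLimit || totalCost > totalCostLimit) {
            let oldest = order.removeFirst()
            if let entry = storage.removeValue(forKey: oldest) {
                totalCost -= entry.cost
            }
        }
    }
}

let cache = BoundedCache<String, Int>(totalCostLimit: 1000, countLimit: 100)
cache.setValue(1, forKey: "a", cost: 400)
cache.setValue(2, forKey: "b", cost: 400)
cache.setValue(3, forKey: "c", cost: 400)  // total would be 1200, so "a" is evicted
print(cache.value(forKey: "a") == nil)     // true
print(cache.totalCost)                     // 800
cache.countLimit = 1                       // evicts "b", the least recently used
print(cache.count)                         // 1
```

Inserting the third 400-cost value pushes the total cost past 1000, so the least recently used entry is dropped; lowering `countLimit` afterwards triggers another eviction.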

Values will be removed from the cache automatically when either the count limit or the cost limit is exceeded. You can also remove values explicitly by using:

let value = cache.removeValue(forKey: "foo")

Or, if you don't need the value, by setting it to nil:

cache.setValue(nil, forKey: "foo")

And you can remove all values at once with:

cache.removeAllValues()

On iOS and tvOS, the cache will be emptied automatically in the event of a memory warning.
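One way a cache can empty itself on a memory warning is to observe a notification and clear its storage when that notification is posted. The sketch below is hypothetical: `PurgingCache` and the notification name `exampleMemoryWarning` are stand-ins chosen so the code runs without UIKit. On iOS and tvOS the system posts `UIApplication.didReceiveMemoryWarningNotification`; this README does not say exactly how LRUCache itself subscribes.

```swift
import Foundation

extension Notification.Name {
    // Hypothetical stand-in for UIApplication.didReceiveMemoryWarningNotification,
    // so the sketch also runs on platforms without UIKit.
    static let exampleMemoryWarning = Notification.Name("ExampleMemoryWarning")
}

// Hypothetical cache that empties itself when the warning notification is posted.
final class PurgingCache<Key: Hashable, Value> {
    private var storage: [Key: Value] = [:]
    private let center: NotificationCenter
    private var observer: NSObjectProtocol?

    init(center: NotificationCenter = .default) {
        self.center = center
        // With a nil queue, the block runs synchronously on the posting thread.
        observer = center.addObserver(forName: .exampleMemoryWarning,
                                      object: nil, queue: nil) { [weak self] _ in
            self?.removeAllValues()
        }
    }

    deinit {
        if let observer = observer {
            center.removeObserver(observer)
        }
    }

    var count: Int { storage.count }

    func setValue(_ value: Value, forKey key: Key) { storage[key] = value }

    func removeAllValues() { storage.removeAll() }
}

let cache = PurgingCache<String, Int>()
cache.setValue(1, forKey: "a")
cache.setValue(2, forKey: "b")
NotificationCenter.default.post(name: .exampleMemoryWarning, object: nil)
print(cache.count) // 0
```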

Concurrency

LRUCache uses NSLock internally to ensure mutations are atomic. It is therefore safe to access a single cache instance concurrently from multiple threads.
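The locking pattern described above can be sketched with a single NSLock guarding all mutable state. `LockedCache` and its `update` method are hypothetical, and LRUCache's internals may differ. In this sketch each method call is atomic on its own, but a `value(forKey:)` followed by a separate `setValue(_:forKey:)` would be two critical sections, so the read-modify-write in `update` happens under one lock acquisition.

```swift
import Foundation
import Dispatch

// Hypothetical cache where one NSLock protects all mutable state.
final class LockedCache<Key: Hashable, Value> {
    private var storage: [Key: Value] = [:]
    private let lock = NSLock()

    var count: Int {
        lock.lock()
        defer { lock.unlock() }
        return storage.count
    }

    func value(forKey key: Key) -> Value? {
        lock.lock()
        defer { lock.unlock() }
        return storage[key]
    }

    func setValue(_ value: Value, forKey key: Key) {
        lock.lock()
        defer { lock.unlock() }
        storage[key] = value
    }

    // Read-modify-write under a single lock acquisition, so concurrent
    // callers cannot interleave between the read and the write.
    func update(forKey key: Key, default defaultValue: Value, _ transform: (Value) -> Value) {
        lock.lock()
        defer { lock.unlock() }
        storage[key] = transform(storage[key] ?? defaultValue)
    }
}

let cache = LockedCache<String, Int>()
DispatchQueue.concurrentPerform(iterations: 1_000) { i in
    cache.setValue(i, forKey: "key\(i)")
    cache.update(forKey: "hits", default: 0) { $0 + 1 }
}
print(cache.count)                  // 1001
print(cache.value(forKey: "hits")!) // 1000
```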

Performance

Reading, writing, and removing entries from the cache are performed in constant time. When the cache is full, insertion time degrades slightly because existing values may need to be evicted each time a new value is inserted. This should still be constant time; however, adding a value with a large cost may cause multiple low-cost values to be evicted, which takes time proportional to the number of values evicted.
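A common way to achieve constant-time reads, writes, and removals is to pair a dictionary (key to node) with a doubly linked list ordered by recency. The sketch below assumes that design; it is not taken from LRUCache's source, and it enforces only a cost limit to stay short. `LinkedLRU` is a hypothetical type, and its `setValue` returns the evicted keys so you can see that eviction work grows with the number of entries removed.

```swift
// Hypothetical O(1) LRU: dictionary lookup plus a recency-ordered linked list.
final class LinkedLRU<Key: Hashable, Value> {
    private final class Node {
        let key: Key
        var value: Value
        let cost: Int
        weak var prev: Node?   // neighbour closer to the least recently used end
        var next: Node?        // neighbour closer to the most recently used end
        init(key: Key, value: Value, cost: Int) {
            self.key = key
            self.value = value
            self.cost = cost
        }
    }

    private var nodes: [Key: Node] = [:]
    private var head: Node?        // least recently used
    private weak var tail: Node?   // most recently used
    private(set) var totalCost = 0
    let totalCostLimit: Int

    init(totalCostLimit: Int) { self.totalCostLimit = totalCostLimit }

    var count: Int { nodes.count }

    // O(1): one dictionary lookup plus relinking a single node.
    func value(forKey key: Key) -> Value? {
        guard let node = nodes[key] else { return nil }
        unlink(node)
        append(node)
        return node.value
    }

    // O(1) for the insert itself, plus one loop iteration per evicted entry.
    @discardableResult
    func setValue(_ value: Value, forKey key: Key, cost: Int = 0) -> [Key] {
        if let old = nodes.removeValue(forKey: key) {
            unlink(old)
            totalCost -= old.cost
        }
        let node = Node(key: key, value: value, cost: cost)
        nodes[key] = node
        append(node)
        totalCost += cost
        var evicted: [Key] = []
        while totalCost > totalCostLimit, let oldest = head {
            unlink(oldest)
            nodes[oldest.key] = nil
            totalCost -= oldest.cost
            evicted.append(oldest.key)
        }
        return evicted
    }

    // Attaches `node` at the most recently used end.
    private func append(_ node: Node) {
        node.prev = tail
        node.next = nil
        if let last = tail { last.next = node } else { head = node }
        tail = node
    }

    // Detaches `node` from the list, fixing up head and tail as needed.
    private func unlink(_ node: Node) {
        if let prev = node.prev { prev.next = node.next } else { head = node.next }
        if let next = node.next { next.prev = node.prev } else { tail = node.prev }
        node.prev = nil
        node.next = nil
    }
}

let cache = LinkedLRU<String, Int>(totalCostLimit: 10)
for i in 1...5 { cache.setValue(i, forKey: "small\(i)", cost: 2) }  // total cost 10
_ = cache.value(forKey: "small1")  // "small1" becomes most recently used
let evicted = cache.setValue(0, forKey: "big", cost: 7)
print(evicted) // ["small2", "small3", "small4", "small5"]
```

Here a single cost-7 insertion evicts four cost-2 entries, one loop iteration each; `small1` survives because it was read just before the insertion.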

Credits

The LRUCache framework is primarily the work of Nick Lockwood.