SpeechRecognitionIOS

This app demonstrates how to use the Google Cloud Speech API and Apple's on-device Speech framework to recognize speech from live audio recordings.

Prerequisites

- An API key for the Cloud Speech API (see the docs to learn more)

- Create a project (or use an existing one) in the Cloud Console

- Enable billing and the Speech API

- Add your iOS bundle identifier to the project (e.g. com.SpeechRecognition.joshuvi.SpeechRecognitionIOS in my case)

- Create an API key and save it for later

- A macOS machine or emulator

- Xcode 13 or later (tested with Xcode 13.2)

- CocoaPods version 1.0 or later

QuickStart

Make sure CocoaPods is installed on your machine. To check, run:

pod --version

❗ Do not run pod install, because the pods already exist (see Podfile and Podfile.lock).

- Clone this repo:

git clone https://github.com/Josh-Uvi/SpeechRecognitionIOS.git

- cd into the SpeechRecognitionIOS directory:

cd SpeechRecognitionIOS

- Open SpeechRecognitionIOS.xcworkspace with Xcode

- In Speech/SpeechRecognitionService.swift, replace YOUR_API_KEY with the API key obtained above.

- Build and run the app.

- Press cmd + R to build and run on the iOS simulator or a connected device

- Alternatively, press the play button in Xcode to start the build

- Once the build finishes, the app runs in the Xcode simulator if you have one configured, or on a device if one is connected

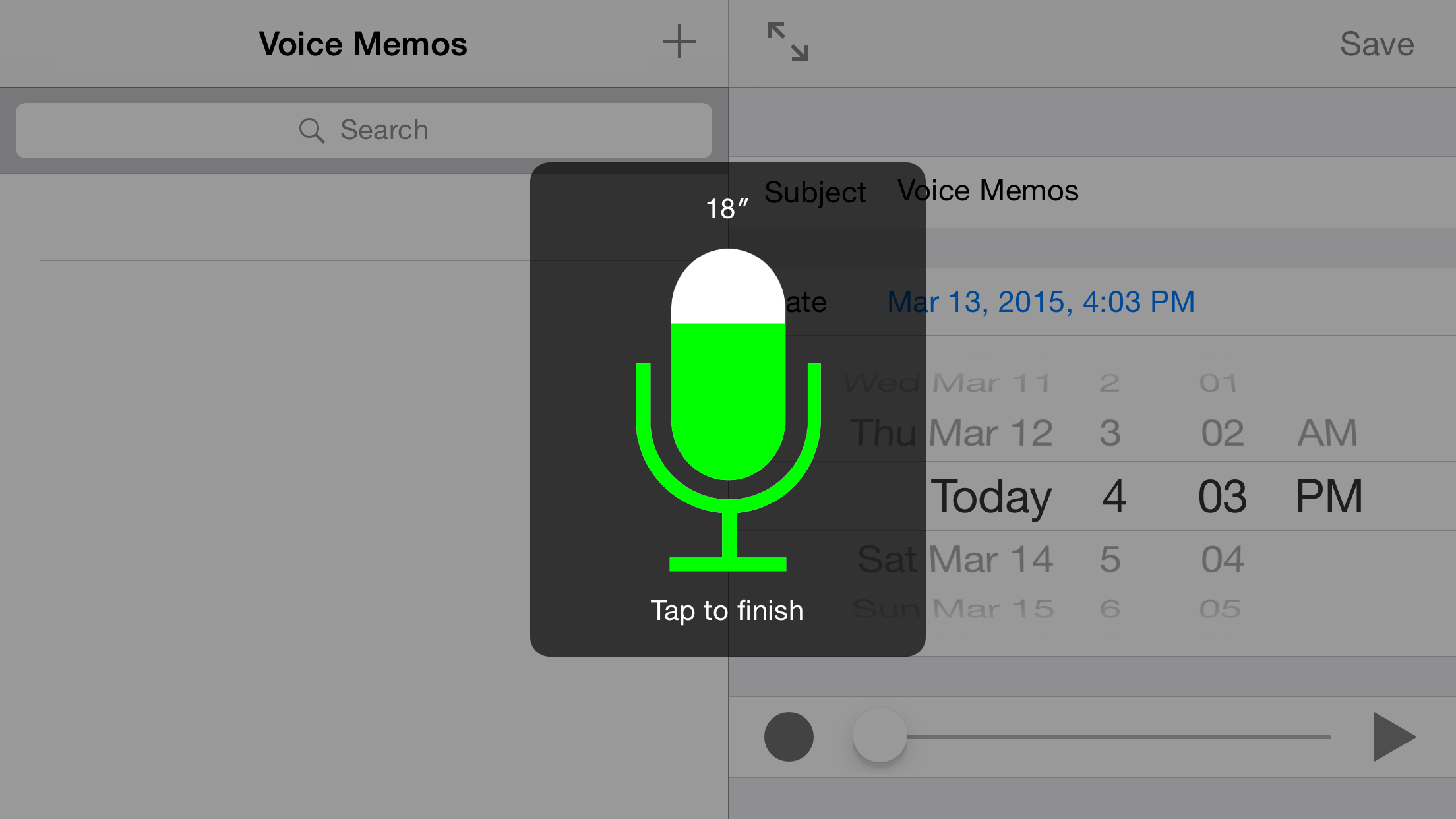

- Say a few words and wait for the display to update when your speech is recognized.
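The quickstart only asks you to swap in your key. As a rough sketch of what that key is for, the Swift snippet below shows one way a Cloud Speech request could carry it, using a plain URLRequest rather than whatever client the app actually uses. It assumes the key lives in a string constant named API_KEY and that the key is restricted to iOS apps, in which case Google expects the bundle identifier in an X-Ios-Bundle-Identifier header alongside the X-Goog-Api-Key header. The authorizedRequest helper and SpeechConfigError type are hypothetical and not taken from this repo.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking // URLRequest lives here on Linux
#endif

// Assumption: the key is stored as a plain string constant; this is the
// placeholder value you are asked to replace.
let API_KEY = "YOUR_API_KEY"

// Hypothetical error type for a key that was never configured.
enum SpeechConfigError: Error {
    case missingAPIKey
}

/// Hypothetical helper: builds a request that carries the API key and,
/// for keys restricted to iOS apps, the app's bundle identifier.
func authorizedRequest(url: URL, apiKey: String, bundleID: String) throws -> URLRequest {
    // Fail early if the placeholder was left in place.
    guard !apiKey.isEmpty, apiKey != "YOUR_API_KEY" else {
        throw SpeechConfigError.missingAPIKey
    }
    var request = URLRequest(url: url)
    request.setValue(apiKey, forHTTPHeaderField: "X-Goog-Api-Key")
    request.setValue(bundleID, forHTTPHeaderField: "X-Ios-Bundle-Identifier")
    return request
}
```

Failing fast on the untouched placeholder gives a clear local error instead of an authentication failure from the server.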


DONE

- Apple Speech on-device library

- Google Speech-to-Text API

- Live audio recording/streaming

- Offline live audio recording/streaming
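For live streaming, audio is usually sent to Cloud Speech in small fixed-size pieces rather than as one recording. Assuming 16 kHz, mono, 16-bit linear PCM (LINEAR16) sent in roughly 100 ms chunks (the actual format and chunk length in this repo may differ), each chunk is 16,000 / 10 × 2 = 3,200 bytes. The sketch below computes that size and splits a buffer of recorded audio accordingly; splitIntoChunks is a hypothetical helper, not part of this app.

```swift
import Foundation

// Assumed audio format: 16 kHz, mono, 16-bit linear PCM (LINEAR16).
let sampleRate = 16_000     // samples per second
let bytesPerSample = 2      // 16-bit samples
let chunksPerSecond = 10    // roughly 100 ms of audio per message

// 16,000 / 10 samples per chunk * 2 bytes per sample = 3,200 bytes.
let chunkSize = sampleRate / chunksPerSecond * bytesPerSample

/// Hypothetical helper: splits buffered PCM audio into full chunks and
/// returns any leftover bytes so they can be prepended to the next buffer.
func splitIntoChunks(_ buffer: Data, chunkSize: Int) -> (chunks: [Data], remainder: Data) {
    var chunks: [Data] = []
    var offset = buffer.startIndex
    // Emit complete chunks only; a partial tail waits for more audio.
    while buffer.endIndex - offset >= chunkSize {
        chunks.append(buffer.subdata(in: offset..<(offset + chunkSize)))
        offset += chunkSize
    }
    return (chunks, buffer.subdata(in: offset..<buffer.endIndex))
}
```

Keeping the remainder instead of sending a short final chunk keeps every streamed message the same duration, which makes latency easier to reason about.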

TODO

- Pre-recorded audio file

- Display live audio recording on an OLED device

- Embed a Python script/program in the app (see the Python-Apple-support docs for more info)

- Add test cases, e.g. unit or E2E tests

- Add a self-hosted speech recognition server/engine (see the Kaldi Speech Recognition Server docs for more info)

- Add CI/CD pipelines